human4d_dataset

HUMAN4D: A Human-Centric Multimodal Dataset for Motions & Immersive Media

HUMAN4D is a large, multimodal 4D dataset containing a variety of human activities captured simultaneously by a professional marker-based motion capture (MoCap) system, a volumetric capture system and an audio recording system.

The related paper was published in IEEE Access (2020) and is available in PDF.

The dataset can be downloaded from Zenodo, where it is split into several parts:

- https://zenodo.org/record/4473009#.YH6nvOgzaUk

- https://zenodo.org/record/4473047#.YH6n1egzaUk

- https://zenodo.org/record/4483817#.YH6n5OgzaUk

- https://zenodo.org/record/4484110#.YH6n-ugzaUk
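
Because the dataset is spread across several Zenodo records, a small helper script can make it easier to keep track of the parts. The following is a minimal sketch, not an official tool. It extracts the record IDs from the links above, builds the matching URL of Zenodo's public REST API (`/api/records/<id>`), and checks a downloaded file against a checksum string of the form `md5:<hex>`. It assumes this is how the API lists each file's checksum. The helper names `record_id`, `api_url`, `md5_of` and `verify` are hypothetical.

```python
import hashlib
import re
from urllib.parse import urlparse

# Zenodo record pages listed above; the "#.YH6..." fragments are ignored.
RECORD_URLS = [
    "https://zenodo.org/record/4473009#.YH6nvOgzaUk",
    "https://zenodo.org/record/4473047#.YH6n1egzaUk",
    "https://zenodo.org/record/4483817#.YH6n5OgzaUk",
    "https://zenodo.org/record/4484110#.YH6n-ugzaUk",
]

def record_id(url: str) -> str:
    """Extract the numeric Zenodo record ID from a record page URL."""
    path = urlparse(url).path  # urlparse drops the "#..." fragment
    match = re.search(r"/records?/(\d+)", path)
    if match is None:
        raise ValueError(f"not a Zenodo record URL: {url}")
    return match.group(1)

def api_url(rec_id: str) -> str:
    """Record metadata endpoint of Zenodo's REST API (assumed layout)."""
    return f"https://zenodo.org/api/records/{rec_id}"

def md5_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Stream a (possibly multi-GB) file through MD5 in fixed-size chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify(path: str, zenodo_checksum: str) -> bool:
    """Compare a local file with a checksum string such as 'md5:<hex>'."""
    algo, _, expected = zenodo_checksum.partition(":")
    if algo != "md5":
        raise ValueError(f"unsupported checksum algorithm: {algo}")
    return md5_of(path) == expected.lower()

if __name__ == "__main__":
    for url in RECORD_URLS:
        print(api_url(record_id(url)))
```

Fetching the JSON metadata and downloading the files themselves are left out on purpose because the exact response layout of the Zenodo API may change. The record pages remain the authoritative source for file lists and checksums.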

For data that are part of the HUMAN4D dataset but are not publicly available, contact us at tofis3d [at] gmail.com.

Pictures taken during the preparation and capture of the HUMAN4D dataset. The room was equipped with 24 Vicon MXT40S cameras rigidly mounted on the walls. A portable volumetric capture system (https://github.com/VCL3D/VolumetricCapture) with 4 Intel RealSense D415 depth sensors was temporarily set up to capture the RGBD data.


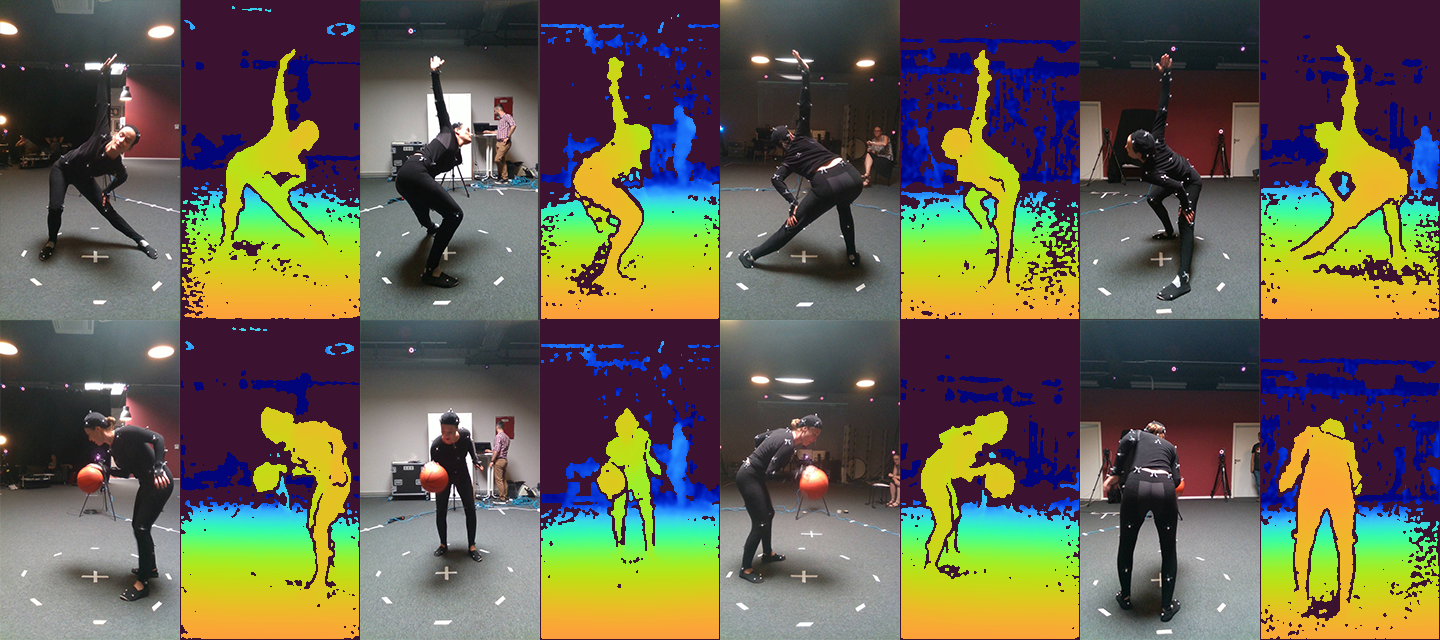

Hardware-synchronized (HW-SYNCed) multi-view RGBD samples (4 RGBD frames each) from the “stretching_n_talking” (top) and “basket-ball_dribbling” (bottom) activities.


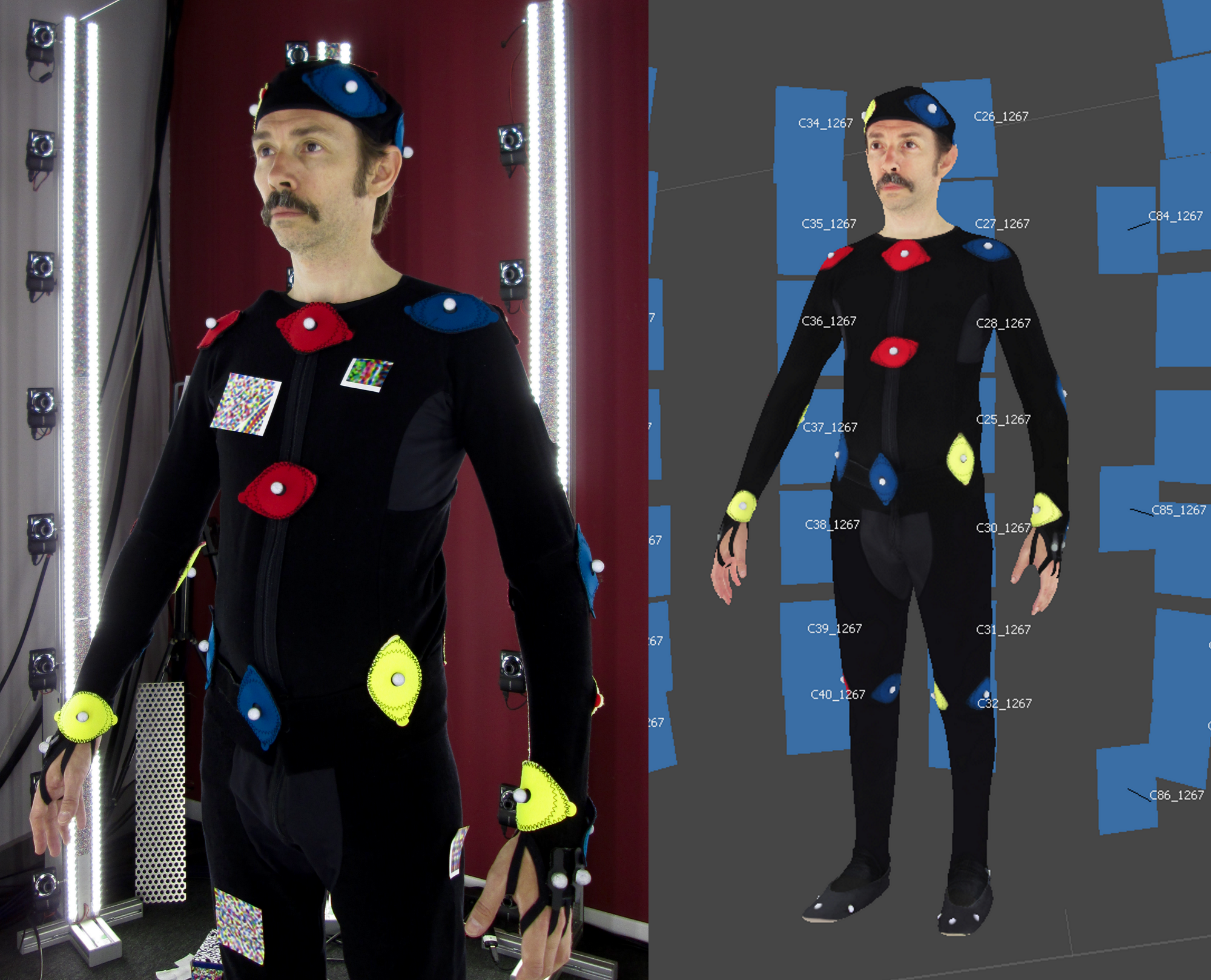

3D scanning: photos of the actor were taken with a custom photogrammetry rig of 96 cameras (left) and reconstructed into a textured 3D mesh using Agisoft Metashape (right).


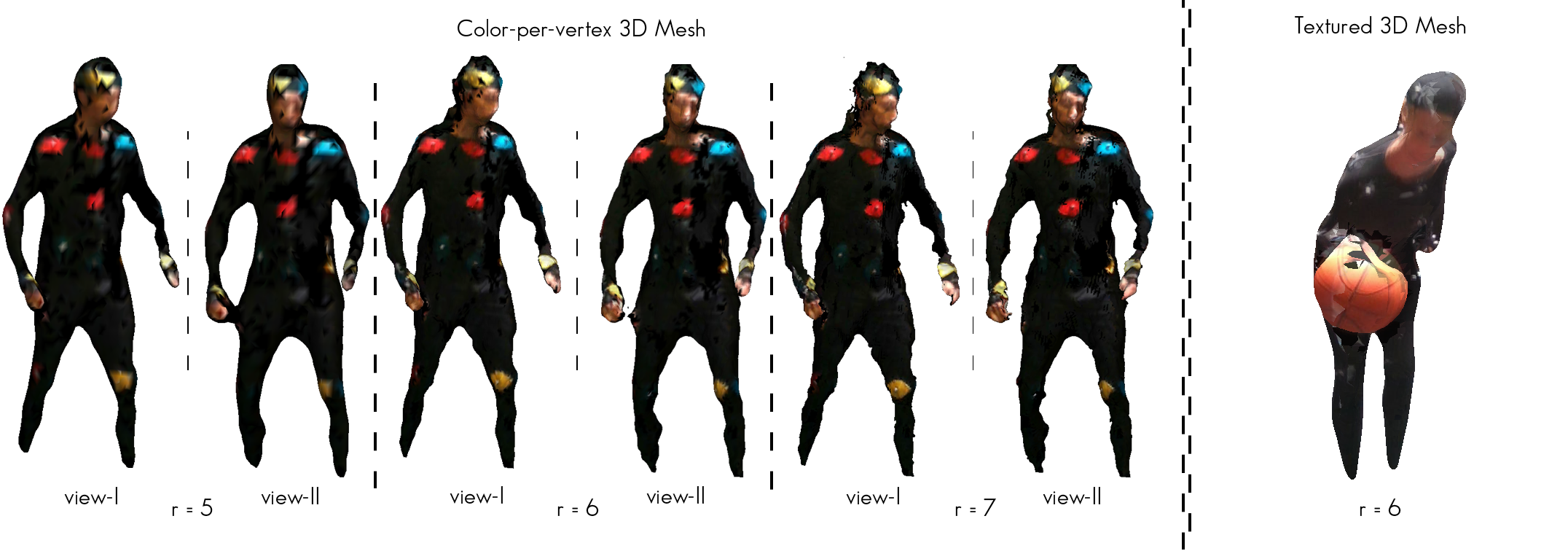

Reconstructed mesh-based volumetric data: (left) per-vertex color visualization at 3 voxel-grid resolutions, i.e. r = 5, r = 6 and r = 7; (right) textured 3D mesh sample at voxel-grid resolution r = 6.
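
The caption does not define r. One plausible reading treats r as an octree depth, the convention used by the depth parameter of Poisson surface reconstruction, for example. Under that reading, each level doubles the grid along every axis, so r = 5, 6 and 7 give 32, 64 and 128 cells per axis. The minimal pure-Python sketch below shows how voxel size and occupied cells would scale under that assumption. The helpers `voxel_edge` and `voxelize` are hypothetical, and the paper should be consulted for the exact definition used in HUMAN4D.

```python
import math
from typing import Iterable, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]

def voxel_edge(extent: float, r: int) -> float:
    """Edge length of one voxel when `extent` is split into 2**r cells.

    ASSUMPTION: r is an octree depth, i.e. 2**r cells per axis.
    """
    return extent / (2 ** r)

def voxelize(points: Iterable[Point], r: int,
             bbox_min: Point, bbox_max: Point) -> Set[Voxel]:
    """Return the occupied cells of a cubic 2**r x 2**r x 2**r grid."""
    n = 2 ** r
    # Cubic voxels: size the grid by the largest bounding-box extent.
    extent = max(hi - lo for lo, hi in zip(bbox_min, bbox_max))
    if extent <= 0:
        raise ValueError("degenerate bounding box")
    size = voxel_edge(extent, r)
    occupied: Set[Voxel] = set()
    for p in points:
        # floor() maps each coordinate to a cell index; clamping keeps points
        # lying on (or slightly outside) the box faces inside the grid.
        idx = tuple(
            min(max(math.floor((c - lo) / size), 0), n - 1)
            for c, lo in zip(p, bbox_min)
        )
        occupied.add(idx)
    return occupied

if __name__ == "__main__":
    for r in (5, 6, 7):
        print(r, 2 ** r, voxel_edge(2.0, r))
```

For instance, under this assumption a 2 m bounding box at r = 6 gives a voxel edge of 2 / 64 = 0.03125 m (about 3 cm). Each increment of r halves that edge and multiplies the number of cells by 8.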


Merged point cloud reconstructed from a single multi-view RGBD (mRGBD) frame, shown from various viewpoints.
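
A merged point cloud like this is typically produced in two steps. Each view's depth map is back-projected through the pinhole camera model, and the resulting points are moved into a common world frame using each sensor's extrinsic calibration. The pure-Python sketch below illustrates that standard procedure. It is not the HUMAN4D or VolumetricCapture implementation. The per-view dictionary layout is hypothetical: keys `depth`, `intrinsics` as `(fx, fy, cx, cy)`, and camera-to-world `R` and `t`. The default `depth_scale` of 0.001 assumes raw depth in millimetres, a common RealSense configuration.

```python
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

def backproject(depth: Sequence[Sequence[int]], fx: float, fy: float,
                cx: float, cy: float,
                depth_scale: float = 0.001) -> List[Vec3]:
    """Lift a depth map (rows of raw depth values) to camera-space points.

    ASSUMPTION: raw values are millimetres, so depth_scale=0.001 gives metres.
    """
    points: List[Vec3] = []
    for v, row in enumerate(depth):
        for u, raw in enumerate(row):
            if raw == 0:  # 0 is treated as "no depth measured"
                continue
            z = raw * depth_scale
            # Pinhole model: invert u = fx * x / z + cx and v = fy * y / z + cy.
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points

def to_world(points: Sequence[Vec3], R: Sequence[Sequence[float]],
             t: Vec3) -> List[Vec3]:
    """Apply a camera-to-world rigid transform x_w = R x_c + t."""
    return [
        tuple(sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3))
        for p in points
    ]

def merge_views(views: Dict[str, dict]) -> List[Vec3]:
    """Concatenate the world-space points of all views of one frame.

    HYPOTHETICAL layout per view: {"depth", "intrinsics": (fx, fy, cx, cy),
    "R", "t"}, with R and t mapping camera to world coordinates.
    """
    merged: List[Vec3] = []
    for view in views.values():
        cam_points = backproject(view["depth"], *view["intrinsics"])
        merged.extend(to_world(cam_points, view["R"], view["t"]))
    return merged
```

The sketch does not perform color lookup, depth filtering or depth-to-color registration. A practical pipeline would add these steps and use vectorized arrays instead of Python loops.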


If you use the dataset or find this work useful, please cite:

    @article{chatzitofis2020human4d,
      title={HUMAN4D: A Human-Centric Multimodal Dataset for Motions and Immersive Media},
      author={Chatzitofis, Anargyros and Saroglou, Leonidas and Boutis, Prodromos and Drakoulis, Petros and Zioulis, Nikolaos and Subramanyam, Shishir and Kevelham, Bart and Charbonnier, Caecilia and Cesar, Pablo and Zarpalas, Dimitrios and others},
      journal={IEEE Access},
      volume={8},
      pages={176241--176262},
      year={2020},
      publisher={IEEE}
    }